The patient is lying on the table in front of me, bright fluorescent lights shining down on her blood-stained clothes. I know she’s been in a car accident, but I have no idea of the extent of the damage yet. I step closer to the table and glance at her vitals on the screen next to her. My scrubs-clad team stands nearby, waiting for my instructions. I wonder where to begin, trying to shut out the sounds of the emergency room around me.

Suddenly, the patient begins to convulse—she’s vomiting. If I don’t make a move soon, she’ll choke and die. Her skin is turning a sickly bluish-gray.

Finally, I remember what I’m supposed to say: “Check for breathing.” One of the doctors jumps forward to follow my command.

Gently, I lift the goggles off my eyes, blinking as I come back to reality. The ER, the patient, and the other doctors have all vanished, and I’m standing in the MedStar Institute for Innovation office, surrounded by the people who built this virtual-reality program from scratch. With the help of headphones, a microphone, VR goggles, a handset controller, and laser sensors on the wall that followed my every move, I’d been thrust into an “emergency” where the commands I gave and the clinical decisions I made determined whether the patient lived or died.

Though MedStar’s Simulation Training & Education Lab (SiTEL) has been laying the foundation for its virtual-reality program for the past four years, it isn’t ready to be used for clinical training. What I sampled is an indication of what it hopes the program can do. A team of staff video-game developers is working to improve a similar program in preparation for its showcase at a conference for emergency physicians in October.

Most people probably associate VR with video games. But as the price has come down, from goggles that cost tens of thousands of dollars in the technology’s early days to $800 models such as the HTC Vive headset I sampled, its use is growing. Walmart recently announced plans to use VR to prepare employees for Black Friday crowds, while NFL teams use it to give players some injury-free practice.

Meanwhile, hospitals in the Washington area, such as those under MedStar, are finding ways to apply the technology to improve physician training and patient experiences.

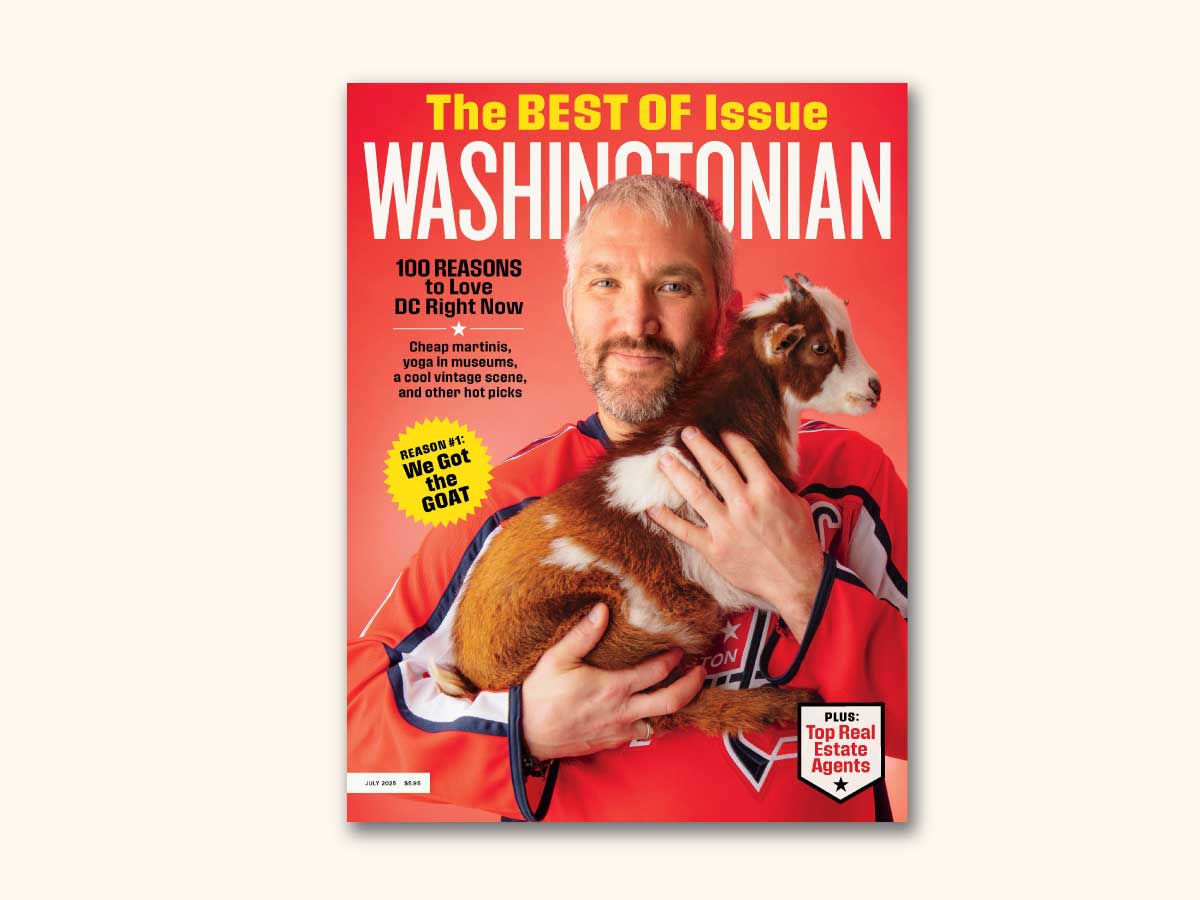

The trauma program that MedStar is developing is called Trauma: Yellow. “The objective was to help emergency physicians and trauma surgeons better manage the first 30 minutes or so of a trauma case in the emergency department,” says Bill Sheahan, director of MedStar SiTEL.

Rather than drop an inexperienced resident into the ER to make decisions about a patient’s care when a real life is on the line, SiTEL has created simulations for practice runs.

The idea of practicing in a simulation before working on a patient isn’t new. MedStar already has residents practice on high-fidelity human simulators, human-size mannequins that have a pulse and can breathe, be intubated, or have an IV placed. Those mannequins don’t come cheap: Sheahan says each costs the hospital more than $100,000, and while they’re great for training, only a few doctors can use a mannequin at one time. By contrast, any number of people could play Trauma: Yellow at once, with additional pairs of $800 VR goggles.

Now that MedStar has Trauma: Yellow’s infrastructure in place, Sheahan says it will be faster for developers to adapt it to other situations. A second game that allows users to practice using a defibrillator took SiTEL only 2½ months to build.

Although the medical world traditionally lags behind other industries in tech innovation, it’s a field that stands to benefit greatly from the technology. In a 2015 NPR interview, Thomas Furness, a professor of industrial and systems engineering at the University of Washington who is known as the “grandfather of virtual reality,” said he saw health-care training as a major future application of the technology. While Furness’s work in virtual reality is rooted in building better operational cockpits for fighter pilots, there’s just as strong a need for zero-consequences practice in medicine as there is in the cockpit. What’s more, while hospitals across the country have experimented with using virtual reality for pain management and PTSD therapy, when MedStar unveils its training program in October, Sheahan believes it will be the first of its kind.

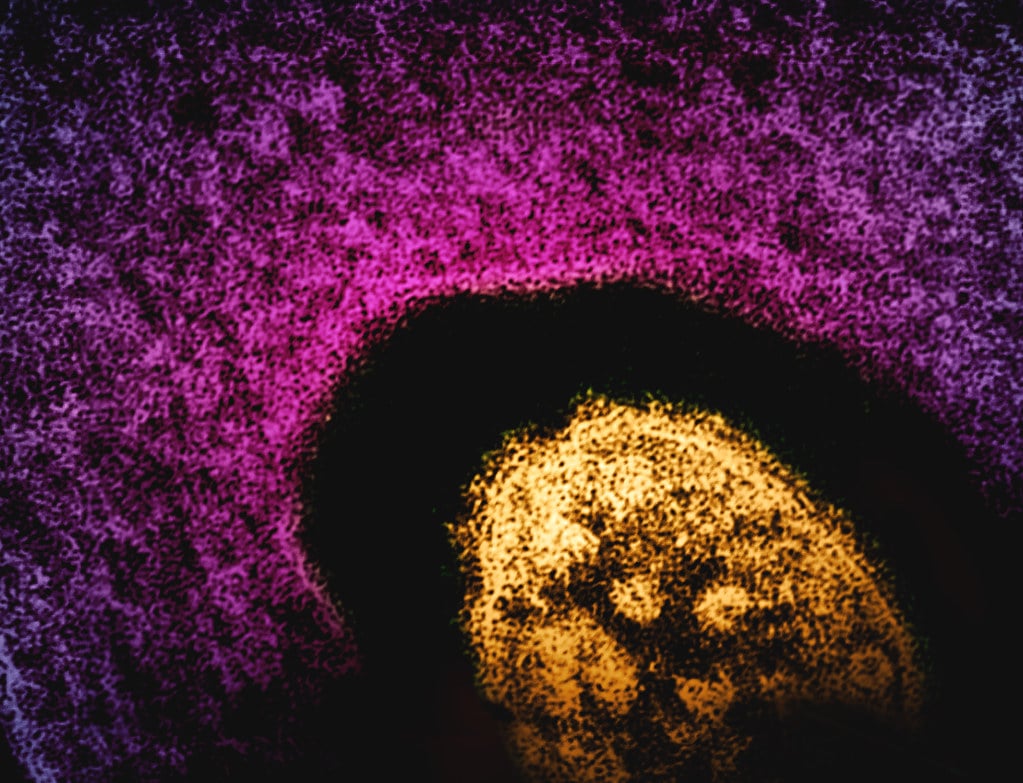

MedStar isn’t the only local health-care provider employing VR. At George Washington University Hospital, the neurosurgery department is using a program called Surgical Theater, which neurosurgeon Jonathan Sherman started looking into after seeing a presentation by a UCLA doctor. The program takes a two-dimensional MRI image and translates it into a 360-degree model of a patient’s head, which can be viewed through VR goggles. The program not only allows surgeons to plan operations, zooming in past layers of skin and bone to look inside a patient’s brain or spine; it can also provide medical residents the opportunity to learn and can give patients a better sense of their own anatomy.

One GW patient, Roodelyne Jean-Baptiste, a 31-year-old security guard from Fredericksburg, Virginia, had already undergone two brain surgeries to remove tumors when her doctor, Sherman, informed her she had two large tumors that had grown back and needed to be removed. Though he showed her the black-and-white MRI image and explained why surgery was necessary, Jean-Baptiste wasn’t keen on going under the knife again. It wasn’t until after the operation, when Sherman gave her a look at the tumors via VR, that she fully grasped the urgency.

“It was amazing seeing what was inside my brain, seeing how big the tumors were,” Jean-Baptiste says. “If he had shown [the VR] to me first, he wouldn’t have had to waste his breath so much to actually convince me to have the surgery.”

For brain surgeons, Sherman says it’s second nature to take an MRI and turn it into a 3-D model in their heads so they can plan the exact trajectory of a surgery. But for patients, it can be much harder to translate a 2-D image into 3-D. While the Surgical Theater program can be useful for physicians, GW neurosurgeon Anthony Caputy thinks its biggest potential is helping patients be better informed.

“Patients always smiled and nodded and kind of seemed like they understood, and in retrospect I don’t know how much they really understood,” says Sherman. “I can tell now that I’m walking patients through their own anatomy with a 360-degree virtual-reality view. I can show them on their skull in the VR setup where I’m going to make an incision.”

While studies have shown that better-informed patients are more likely to be satisfied with the results of their surgery, I had to wonder if VR is just an overpriced, flashy toy that may add to the cost of health care. But given that patients have their choice of Washington-area hospitals, the hope is that the flashiness is part of the attraction, says GW neurosurgeon Michael Rosner. For example, he explains, the fact that Johns Hopkins currently has an intraoperative MRI, which creates images of the patient’s brain during surgery, could be a draw for patients—at least until GW gets its own intraoperative MRI.

In some cases, the VR technology that hospitals are using literally consists of toys. At Children’s National, pediatric anesthesiologist Julia Finkel is studying how VR can be used to reduce the experience of pain for pediatric patients undergoing bone-marrow transplants. In this case, the VR goggles are off-the-shelf models loaded with games such as Minecraft and Jurassic Park.

Finkel is currently running clinical trials, funded by the Hope for Henry Foundation, to determine best practices for using VR with patients. Ultimately, the goal is to reduce the need for opioids, which, though effective, aren’t always managed well.

“Moving away from some of the medications we use to manage pain helps diminish the side effects,” says Finkel. “Also, the patient experience is enhanced. The kids universally enjoy the VR, and it does have anxiety-alleviating effects. Even if pain management is our goal, the side effects of VR are all positive ones.”

Sibley Memorial Hospital is beginning to look into providing VR headsets to patients as an “escape” from chemotherapy treatment, says Nick Dawson, executive director of Sibley’s Innovation Hub. Patients would be able to request a mobile VR cart, then be transported anywhere in the world through Google Earth images. Sibley also wants to partner with the Smithsonian to create recorded virtual tours of the museums for patients to enjoy.

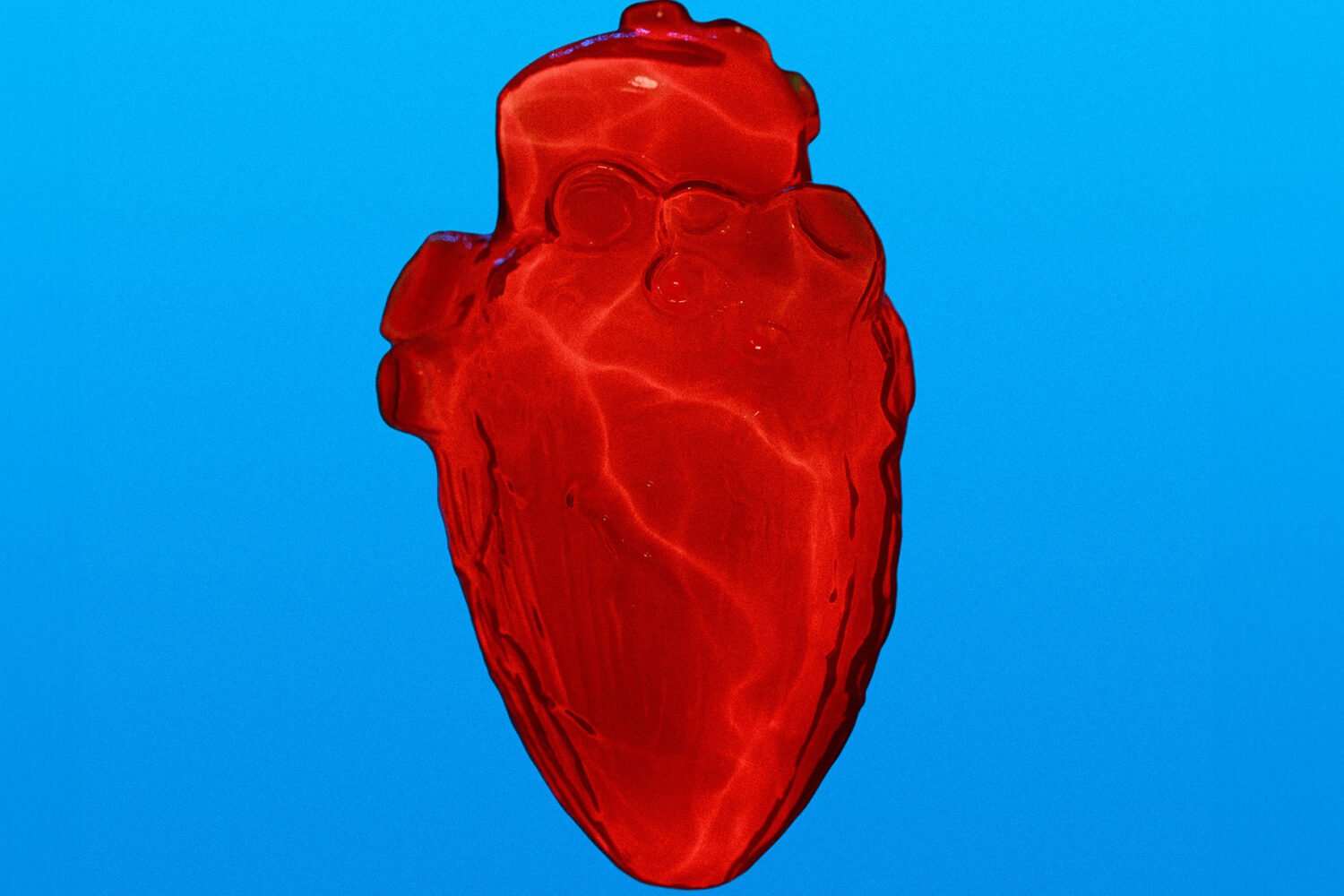

While time and more research will clarify how effective VR is in distracting patients from pain, some, including Rosner and Sheahan, see its limitations for training the next generation of physicians. Sure, it’s great for looking at human anatomy, but there’s nothing tactile about it—a brain surgeon can use VR to look at what’s beneath the skull but can’t experience the feeling of drilling through bone.

Where VR’s real potential in medicine may lie is in a combination of the physical and the virtual. For example, MedStar’s defibrillator training program incorporates a CPR dummy that, through the VR goggles, looks like a real human. By combining the dummy and VR, physicians can go through the movements of placing defibrillator pads while seeing the “patient’s” vitals via the goggles.

There’s a name for this combination of virtual and real: augmented reality. In 2015, Microsoft made a splash with the announcement of its “mixed reality” product—the HoloLens, which generates holographic images over the real world around you. With time, devices such as the HoloLens could be used in surgery to provide “x-ray vision” so a doctor could see how what he or she is doing affects the patient’s body in real time—an application being explored by a UK product-development company.

Says Sherman: “The conversion of virtual to augmented reality is where this can really have the most value across disciplines.”