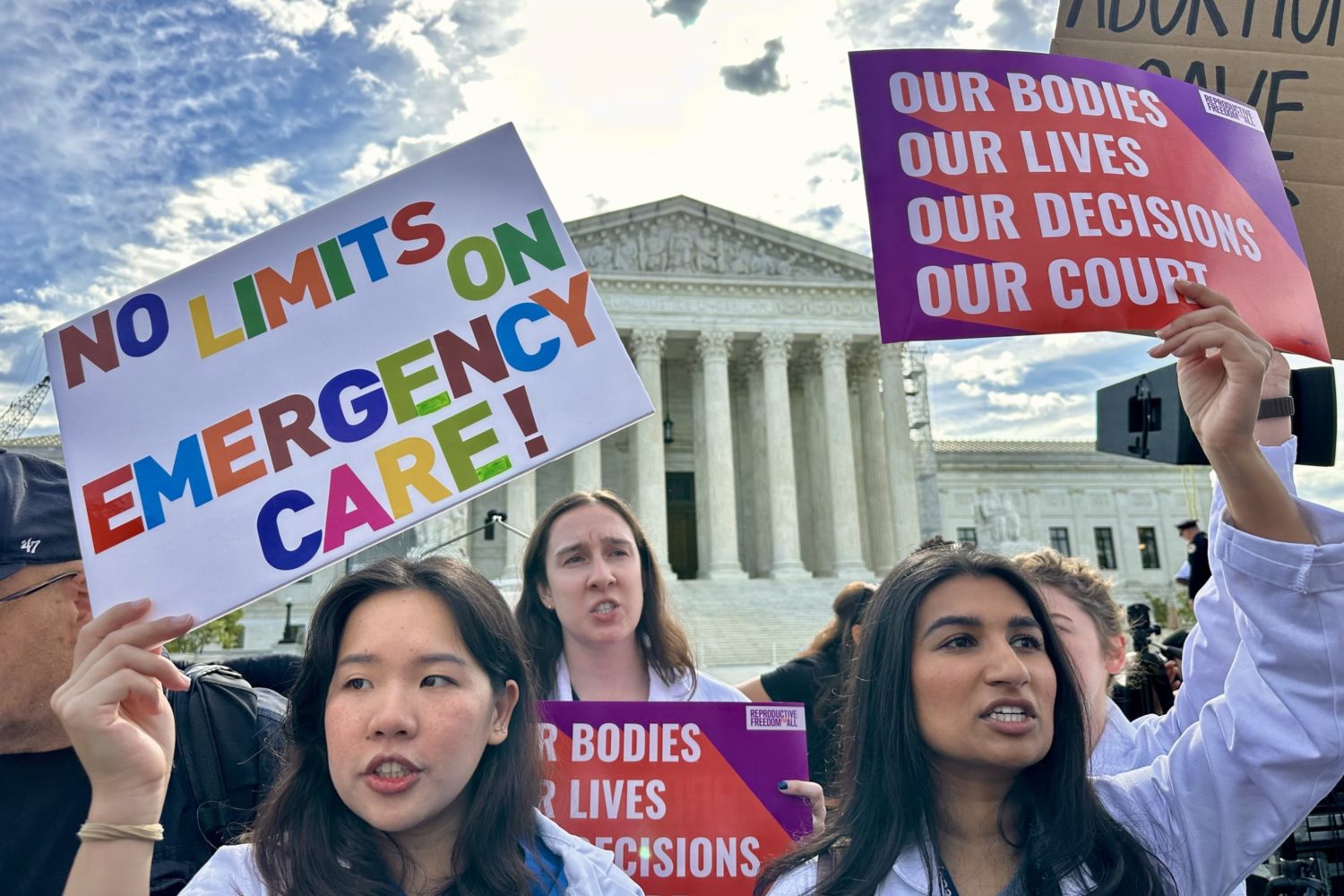

The Supreme Court began hearing arguments Tuesday in Gonzalez v. Google, a landmark case that could bring significant changes to the internet as we know it. The backstory: In November 2015, Nohemi Gonzalez, a California college student studying abroad, was killed in the terrorist attacks in Paris. The following year, her family sued Google over the role its algorithm played in recommending dangerous content, like ISIS videos, to YouTube users.

Why is this case a big deal? Because it could break the internet. Under current law, Section 230 of the Communications Decency Act, companies are largely shielded from liability for what their users post and are free to moderate their platforms as they please. A ruling from the court could mean big changes.

We spoke to Michael Carroll, director of the Program on Information Justice and Intellectual Property, to break the technical case down into simple terms, terms fit for your golden retriever.

What is Section 230 of the Communications Decency Act?

“This is a provision that was added to the Communications Act [by the Telecommunications Act of 1996]. It has two parts: Part one is that you don’t treat a provider of interactive computer services (social media) as the publisher of information provided by users. Basically, this arose back in the day of chat rooms. If somebody posted a defamatory chat, the service provider would not be treated as the publisher of that. Whereas if there’s a defamatory quote in the newspaper, or in the Washingtonian, the law will treat them as the publisher of that statement. Part two is, if the service provider chooses to take down harmful content, you can’t sue them for that decision either.

“So, Section 230 is sort of a two-step. We’re going to immunize you if you don’t take it down, but we’re also going to immunize you if you do take it down.”

What does Gonzalez v. Google have to do with Section 230?

“The ultimate claim is that the social media providers helped ISIS recruit people who carried out a terrorist attack in Paris in 2015. But Google, on behalf of YouTube, said, ‘Wait a minute, you’re trying to treat Google essentially as the publisher of ISIS videos.’ You can’t do that under Section 230.

“Google’s argument is that they only provided an automated algorithm that all YouTube users are familiar with. It either suggests videos that you might want to watch, or automatically loads follow-up videos to play. Google argued the content all came from ISIS. The fact that their recommendation algorithm just tees up a thumbnail doesn’t make it Google’s content. It’s still third-party content, and therefore they’re not liable. But Gonzalez is saying Google should have legal responsibility.”

Many of the Justices on Tuesday seemed concerned that if they were to rule against Google, it could open the door to an onslaught of lawsuits against media companies. What do you think?

“You’ve heard a lot of this sort of rhetoric, that this case could ‘break the internet.’ I think what social media companies are saying is, ‘Look at the scale of our activity. Look at how many users we have.’ Once you open the door to a lawsuit for one user, you open it up to all of them. And there are going to be plaintiffs and lawyers out there chomping at the bit to bring a suit.

“They’re basically saying there’s no in-between position once you decide that this immunity has cracks in it. Matching users and videos through the recommendation algorithm is a core part of what a social media platform does. Think about all the different lawsuits that people will be able to dream up when they’re matched with harmful content.”

If the court imposes limits on social media companies’ legal immunity, would that change the entire way they do business?

“I think Supreme Court Justice Elena Kagan really voiced the court’s problem. She says: on the one hand, social media has grown up into a much bigger, more sophisticated business than it was in the 1990s. And it seems like every other business has to take responsibility when its services hurt people, but social media is off the hook because of this statute. That seems too broad. But on the other hand, how do we draw the line? Where do we say, ‘This lawsuit can go forward, but that one can’t’? Kagan said, ‘You know, these are not like the nine greatest experts on the internet.’”

How would these limits impact internet users? Supreme Court Justice Amy Coney Barrett expressed concern that this could result in social media users being held liable for what they retweet or share online.

“Think about the case of office gossip. You’re in the office, and someone comes to you and says, ‘Hey, did you know that Bob has been stealing from the company?’ Then, you don’t do any investigation, and you just recklessly turn around and tell someone else. Then you’re actually liable for defamation because you are republishing a defamatory statement.

“But when the same thing happens on social media, you’re protected. Because Section 230 says no provider or user of an interactive computer service should get treated as the publisher of content provided by [a different user]. That’s where Justice Barrett was. On social media, people say stuff. They retweet. Stuff goes viral. You’re protected even if what you’re sharing would otherwise be the basis for a lawsuit. Section 230 is protecting you as much as it’s protecting Google and Meta and Twitter.”

How else will everyday internet users be affected if the court rules against Google?

“That would have a big impact on social media’s free-flowing ability to connect you with content. You’d have to work harder to find new content as a user. Maybe that’s good for the person who’s addicted to TikTok. But it would definitely change the calculus those companies make about how to, in their view, enrich the user experience by giving you suggestions of stuff you might like. The lawyers would get much more involved in the engineering decisions and the business decisions.”

Then the big question is: Where to draw the line?

“When push comes to shove, the court is going to look for a line to limit social media’s immunity. I think they’re going to find that they really can’t draw it confidently. They don’t have a clear picture of what kinds of lawsuits would then come through the door. I think that’s why this really should go back to Congress. Then you’re not just trying to draw a line based on a 1996 law; you can open up the conversation to a lot of different forms of regulation.”

How would you explain this potentially internet-breaking case to, say, a golden retriever?

“This was the first time the Supreme Court looked at this basic law of the internet. And it’s a basic law because social media companies have built their businesses relying on it. They rely on it because it means they won’t get sued just for what their users are doing. I think the court is going to decide that it is not ready to make any substantial change to the law. And it definitely does not want to break the internet.”