“Hi, I’m Pepper!” says the white, four-foot-tall, and surprisingly cute robot. The small iPad-like screen on its chest offers a menu of activities and questions you can ask. The tiniest nose and the tiniest mouth meet an angular, also tiny, chin. Its eyes, which take up about half of its face, light up in various colors. If you look closely, you might see the tiny blinking red light in its right eye, just one of three scanners. Pepper sees you, senses you, and can read you. Now, Pepper can create music for you from a Jackson Pollock.

A fleet of 20 Pepper robots, priced at $26,000 each and donated to the Smithsonian by Japanese tech company Softbank Robotics, was released in April as part of a pilot program at six institutions: the Hirshhorn, American Art, African American History & Culture, African Art, the Castle, and the Environmental Research Center. “I think it goes without saying, my title is obsolete,” says Kristi Delich, associate director of visitor services for the Smithsonian Institution. “It was almost a delightfully unexpected program; this is new territory for visitor services staff.” Pepper has pre-programmed answers to specific visitor questions. “She’s not like a Siri or an Alexa as of yet,” Delich says. “If a visitor asks a question that Pepper doesn’t know, we go in and add that.” Although many Peppers only answer basic FAQs and lead fun activities (like dancing or exhibit-related storytelling), a handful of them are gaining more advanced abilities.

ARTLAB+ educator Ian McDermott is the programmer behind Pepper’s latest skill. He created a system that allows Pepper to scan an image—let’s say, of a Georgia O’Keeffe floral painting—and, through a few steps, turn its colors into sounds. He showcased Pepper’s new talent at SAAM’s Technology Family Day this past Saturday, with 50 works by 10 painters (from Pollock and O’Keeffe to Jacob Lawrence and Nam June Paik) programmed into the robot.

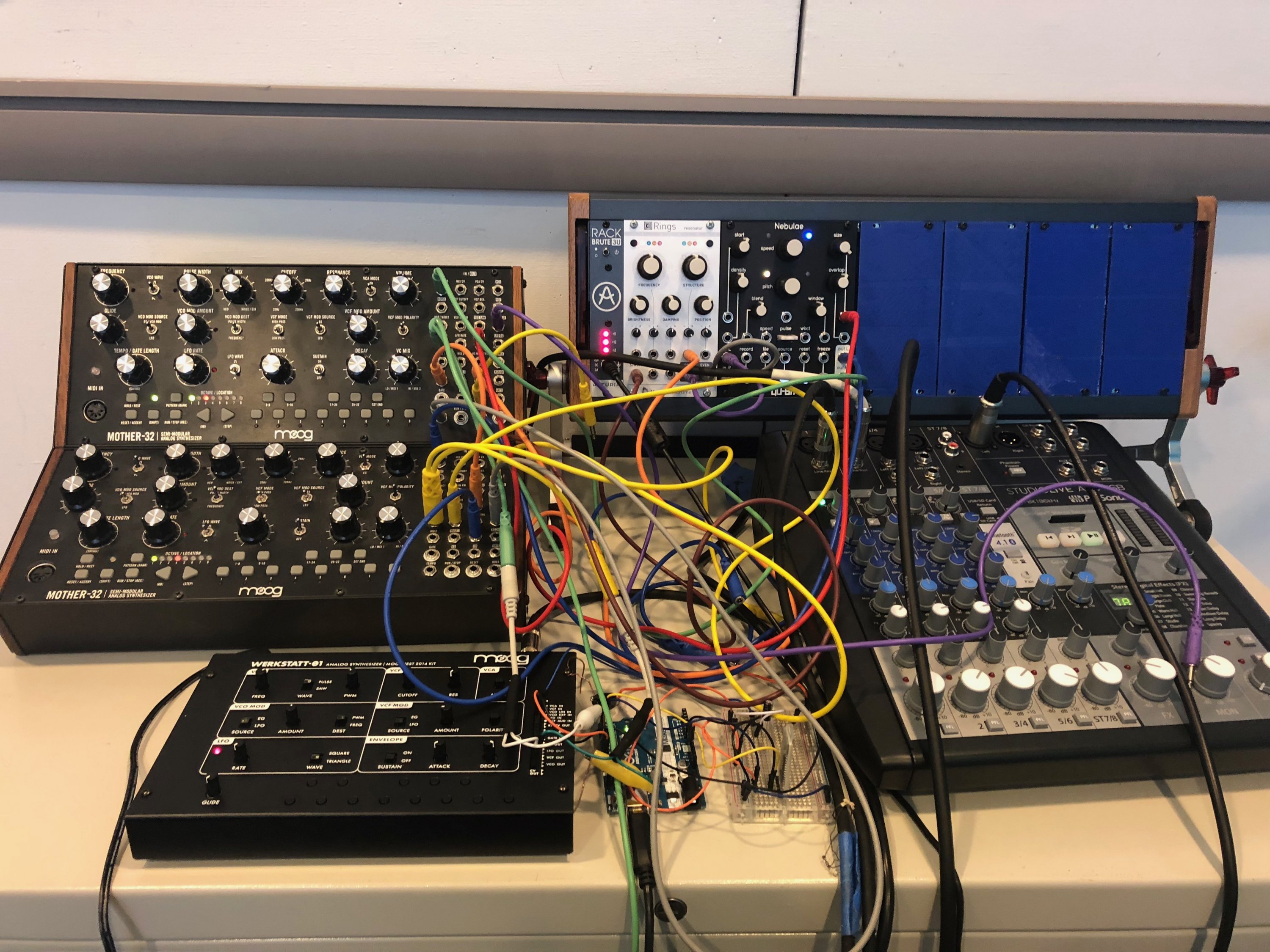

To pull this off, Pepper brings up an image of the painting on its chest screen. McDermott built a small Bluetooth transceiver that Pepper holds in its hand and glides back and forth across the screen, picking up the red, green, and blue light to register the colors. The color readings are then sent (via Bluetooth) to an Arduino, which McDermott explains is like a tiny computer with just one function: translating the light/color data into electronic pulses. Those pulses are turned into sounds by a complex system of synthesizers, with the note and frequency changing according to the colors shown and McDermott’s own tweaking.
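For readers curious what a color-to-sound step might look like, here is a minimal Python sketch. It is not McDermott's system: the article does not describe his actual mapping, so the rule used here (hue chooses a note from a pentatonic scale, lightness chooses the octave) and every name in it (`rgb_to_midi_note`, `midi_to_frequency`, `C_MAJOR_PENTATONIC`) are assumptions for illustration only.

```python
import colorsys

# Hypothetical mapping: the article does not say how colors become notes.
C_MAJOR_PENTATONIC = [0, 2, 4, 7, 9]  # semitone offsets from C


def rgb_to_midi_note(r, g, b, scale=C_MAJOR_PENTATONIC, base_note=48):
    """Map an 8-bit RGB reading to a MIDI note number.

    Hue selects the scale degree; lightness selects one of three octaves.
    """
    hue, lightness, _saturation = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
    degree = min(int(hue * len(scale)), len(scale) - 1)
    octave = min(int(lightness * 3), 2)
    return base_note + 12 * octave + scale[degree]


def midi_to_frequency(note):
    """Convert a MIDI note number to a frequency in Hz (MIDI 69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)
```

Under these assumed rules, a pure red reading of `(255, 0, 0)` lands on MIDI note 60 (middle C, roughly 261.6 Hz), while a pure blue reading of `(0, 0, 255)` lands on note 67, the G above it. A real setup would feed such values to a synthesizer rather than just computing them.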

Paintings aren’t the only thing that Pepper can take into account: with emotion detection software in those bug eyes, Pepper can read a Facebook scale of facial reactions (happy, sad, angry, surprised, and neutral). McDermott has grouped Pepper’s pre-programmed paintings by emotion, so that when Pepper sees your reaction, it can show you a corresponding work and play its song. The next step will involve the music changing based on your reaction, like using a major scale if you smile, so you, too, can participate in the process.
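The emotion-matching idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the five emotion labels come from the article, but the function names, the fallback to "neutral," and the scale table are hypothetical (the article mentions only a major scale for a smile), and the painting groups are passed in by the caller rather than taken from McDermott's actual groupings.

```python
# The five reactions named in the article.
EMOTIONS = ("happy", "sad", "angry", "surprised", "neutral")

# Hypothetical scale table: only "major scale for a smile" comes from the article.
SCALES = {
    "happy": [0, 2, 4, 5, 7, 9, 11],  # major scale
    "sad": [0, 2, 3, 5, 7, 8, 10],    # natural minor scale (assumed)
}
DEFAULT_SCALE = [0, 2, 4, 7, 9]  # major pentatonic fallback (assumed)


def pick_painting(emotion, paintings_by_emotion, fallback="neutral"):
    """Return the first painting grouped under the emotion, or under the fallback."""
    if emotion not in EMOTIONS:
        emotion = fallback
    candidates = paintings_by_emotion.get(emotion) or paintings_by_emotion.get(fallback, [])
    return candidates[0] if candidates else None


def pick_scale(emotion):
    """Return the semitone offsets of the scale to use for a detected emotion."""
    return SCALES.get(emotion, DEFAULT_SCALE)
```

For example, with placeholder groups such as `{"happy": ["placeholder A"], "neutral": ["placeholder B"]}`, a detected "happy" reaction returns "placeholder A" and the major scale, while an "angry" reaction with no paintings of its own falls back to the neutral group.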

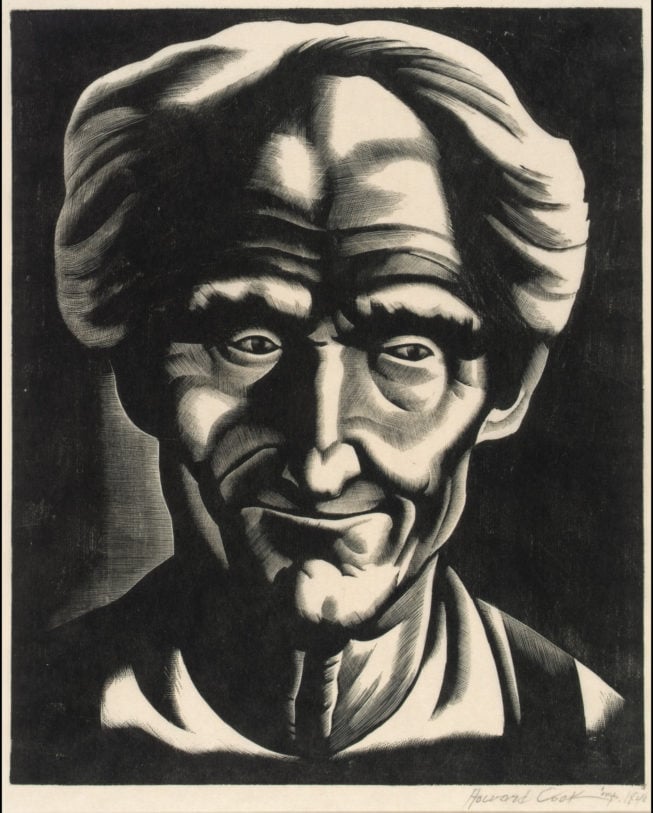

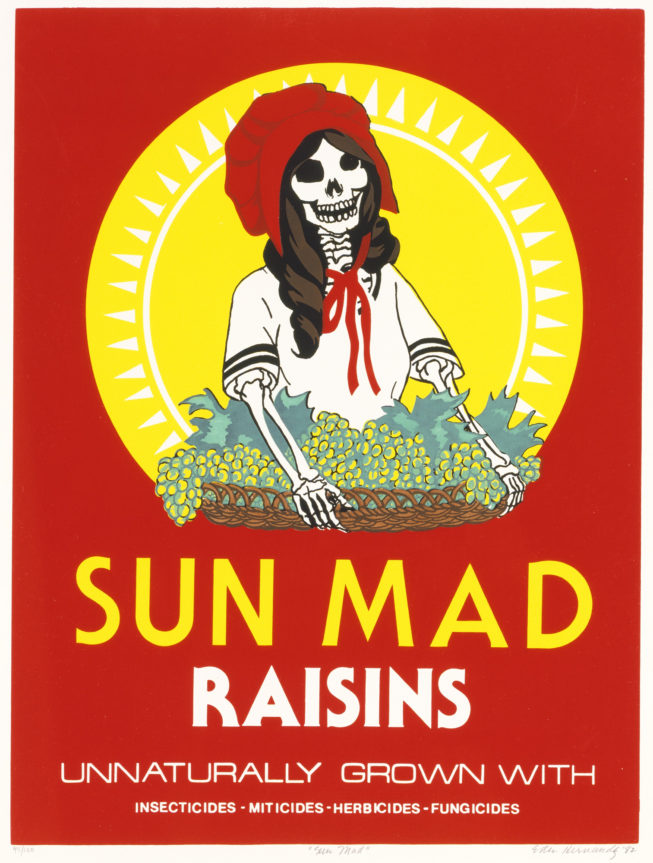

McDermott sent along three example recordings based on works in the SAAM collection (not currently on view): the black-and-white portrait “Happy Uncle (Old Timer),” a wood engraving on paper by Howard Cook; “Sun Mad,” a screenprint by Ester Hernandez; and “Marlin,” a painting by Joan Mitchell.

"Happy Uncle (Old Timer)" by Howard Cook, 1935. Photograph courtesy of SAAM.

"Sun Mad" by Ester Hernandez, 1982. Photograph courtesy of SAAM.

"Marlin" by Joan Mitchell, 1968. Photograph courtesy of SAAM.

Here are the tracks Pepper created from these images: