On November 22, 1963, John F. Kennedy declared to a breakfast audience in Fort Worth that America was now “second to none” in military strength. “This is not an easy effort,” he warned. “But this is a very dangerous and uncertain world.” By lunchtime, that was clear as never before. That the day’s tragedy stripped a generation of its innocence, unleashing the turbulence to come, is now a given. But the imprint of Dallas points to an equally important conclusion: The assassination marked an early triumph of the information age, and an early revelation of its limits.

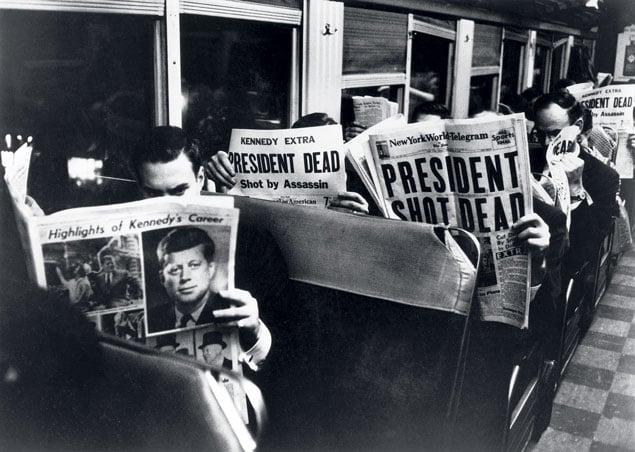

There was Walter Cronkite breaking the news in close to real time, uniting the nation in grief and sensory overload, mass media stamping the images—the Zapruder film, Jackie’s suit, Lee Harvey Oswald’s own murder, the funeral—on the public mind. In time, nearly every American had an opinion about what “really” happened in Dealey Plaza.

Today our obsession with the assassination reflects the faith that people had come to place, by the mid-’60s, in scientific and technological innovation. The century’s quantum leaps fostered a conviction that science and technology could be put to work to accomplish just about anything. Surely the era that spawned nuclear weapons and a polio cure, NASA and The Tonight Show, would empower us to fashion a post-November 22 world that made sense. Surely it could reckon with something as gritty and reducible as a rifle murder.

Naturally, as would become the norm, a witness was taking home movies. Silent, dreamily colored, Abraham Zapruder’s film established an immovable Euclidean grid for investigation: 26 unimpeachable seconds of defined time and space. With that as our guide, couldn’t forensics, ballistics, acoustics, spatial mapping, and spectrographic analysis resolve a question as simple as whether the President had been shot from the front or from behind? The problem, of course, is not only that time travel remains out of reach. It is also that the very advances that inspired this faith swiftly became mired in the swamp of specialization and experts for hire. Trial lawyers have a saying: You get your doctors, we’ll get ours.

As with much in the information age, the inquiry into JFK’s murder long ago turned adversarial, pitting lone-gunman proponents against conspiracy theorists: an argument in perpetuity. On both sides, the obsessed have welcomed each new breakthrough chiefly as a vehicle to help them “solve” the crime: You get your ballistics experts, we’ll get ours. Nowadays, anyone left talking about Oswald and his rifle, the grassy knoll and the motorcycle recording—all the pieces stuck like flies to Zapruder’s grid—swiftly overwhelms the layperson with technical arcana. In the information age, both sides come well armed.

The post-World War II era seemed to promise an upward trajectory of benign rationality, a collaboration of Big Government and Big Academia that would make sense of the inexplicable. Instead, the assassination taught America a painful lesson: No matter how far science and technology advance, some things will remain unknown and, worse, unknowable. What “really” happened, in almost any case, can remain elusive.

JFK’s death reminded us that the world will forever be a mysterious, even mystical, place. Those most fiercely devoted to the assassination as a subject of inquiry exhibit the hubris of believing they can transcend this reality. And that makes for a dangerous and uncertain world.

James Rosen is chief Washington correspondent for Fox News.

This article appears in the November 2013 issue of Washingtonian.