The shock of Donald Trump’s victory seemed to overturn everything we thought we knew about presidential campaigning, especially the science of polling. In a year that appeared to belong to data nerds, armed with algorithms and demographic models, nearly every poll was wrong. It’s a moment to turn to John H. Johnson, cofounder of Edgeworth Economics and author of the recent Everydata: The Misinformation Hidden in the Little Data You Consume Every Day. Johnson provides expert testimony in high-profile court cases, explaining huge, complex data sets in terms a lay jury can understand.

Like pollsters who have come to data’s defense since November 8, Johnson points out that many Americans ignored the polls’ margin of error and other simple concepts of probability. But he also believes that the sheer rush of data—and a little arrogance—helped pollsters miss the real story. We talked to Johnson about the polling industry’s crisis of confidence and what he’d do about it.

Before the election, pollsters were already answering tough questions because they had predicted that Britain’s Brexit referendum would fail. It didn’t. They also said Hillary Clinton would be our next President. Is polling in trouble?

I’ve heard a lot of people say data is dead. Data is not dead. Data is still a very important part of how we learn about the world. I do think the polling industry has some really big challenges ahead, the first being homing in on its turnout models.

The public sees a number like “Hillary Clinton leads Donald Trump 48 to 43 percent.” Underneath that is the turnout model, based on how the same people voted in the last presidential election, or some enthusiasm measure that doesn’t have much transparency. That’s where the polls got it wrong. There were a whole bunch of people who turned out to support Donald Trump, especially in the Midwest, who were missed.

But there is a second issue, much more fundamental to the way polling is done: how to get responses in a world without as many land lines. People aren’t answering their cell phones. They are oversaturated with pollsters calling—I can’t even imagine being a voter in New Hampshire. So the person who wants to talk on the phone is the most excited about their candidate. You could talk to a lot of gung-ho Hillary voters, while the Trump voters who are skeptical of the media or of pollsters don’t answer their phones. If you can’t correct for those, that’s a big problem.
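The response problem Johnson describes can be put in rough arithmetic. What follows is a minimal sketch, not a pollster's actual correction method: the function name `observed_share` and every number in it are hypothetical, chosen only to show how a small gap in willingness to answer the phone can shift a poll even when the electorate is evenly split.

```python
def observed_share(true_share, response_rate_a, response_rate_b):
    """Share of poll respondents backing candidate A when supporters of
    A and B answer the phone at different rates."""
    answered_a = true_share * response_rate_a          # A supporters who respond
    answered_b = (1.0 - true_share) * response_rate_b  # B supporters who respond
    return answered_a / (answered_a + answered_b)


# Hypothetical inputs: an evenly split electorate in which A's supporters
# answer 10% of calls and B's supporters answer 8%.
print(round(observed_share(0.5, 0.10, 0.08), 3))
```

With equal response rates the function returns the true share. With the assumed 10 percent versus 8 percent gap, candidate A appears to lead by several points in a race that is actually tied.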

These problems have been out there a while. Was something else different this time?

If you compare just 2012 to 2016, the amount of polling now is incredible. The last week before the election, there were two dozen polls. It’s easy, in the rush to get the latest numbers out, to miss something. It’s a classic case of having all this information, which should be giving us more, but actually losing the detail and the richness. You can’t dig into that detail when you’re looking at 20 polls in a week.

It would be better to look at a really good, reputable poll and see what we can learn from it and treat it as a snapshot—as opposed to making it an input into a forecasting model that’s going to give me some probability that appears to tell me there’s X percent likelihood that this candidate is going to win. That may be what everyone cares about, but it’s the former that we can do really well as statisticians.

I guess what I’m saying is a little bit of humility is really helpful.

So what should the story have been?

What it should have been is “We have a tightening race. There are several states that are too close. These groups of voters are pro-Hillary, these groups are pro-Trump—who’s going to come out?” That’s a more nuanced story. It’s more fun to say, “Hillary Clinton has a 95-percent chance of winning.”

After 2012, the popularity of polling aggregators like FiveThirtyEight and Real Clear Politics soared. This year was the year of the pollster. Every outlet seemed to have their star. There was a time when pollsters gave the information to the media, the media interpreted the information, and then it went to the public. Now pollsters are part of the media organization—you have people on staff conducting and interpreting the polls. You lose a little of the scientific approach, where there is more peer review. I’ve been trying to advocate for something like a data ombudsman, an independent third party who could scrutinize everything, and maybe then you educate people about what to look for.

Some have said we need to rely less on data and more on our eyes. One Democratic National Committee staffer said after the election, “We’re too reliant on analytics and not enough on instinct and human intel.” Did we fail to see what those passionate Trump rallies were telling us?

You don’t want to overemphasize anecdotes or our instincts, because they can be just as wrong. But if you go to the Trump rally in Pennsylvania and you see for yourself the anger or economic issues at play, or these core values, maybe you go look at a different sample. Or you look more closely at Wisconsin to see if those voters are there, too. Experience can guide us to where to look in the data. It can uncover the answers we’re missing. It’s a synergy between the two.

How did this surprise differ from Brexit?

Brexit had this very odd phenomenon where the phone polls were giving one direction and internet polls were giving the other, and they were doing averages of the two. At the end, they did a flurry of phone polls, and because more of the “stayers” were on the phone polls and the “leavers” were on the internet polls, the average got spiked toward staying. So sometimes there are mechanical aspects that can lead to this.

After our 2012 election, Gallup did a huge, high-profile self-reflection because they predicted Romney would win. They found that they had disproportionately missed people on the West Coast because of the time of day they were calling people. But there are other parallels. In Brexit, a lot of people wanted the result to be stay and believed it would be stay. Then it wasn’t.
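The mechanical effect Johnson describes in the Brexit averages can be illustrated with a small calculation. This is a sketch under stated assumptions: the poll figures are invented for illustration (they are not actual Brexit polls), and the two helper functions are hypothetical names, not any aggregator's published method.

```python
from statistics import mean


def simple_average(polls):
    """Average every poll equally, regardless of how it was conducted."""
    return mean(share for _, share in polls)


def mode_balanced_average(polls):
    """Average within each polling mode first, then across modes, so a
    flurry of polls from one mode cannot dominate the result."""
    by_mode = {}
    for mode, share in polls:
        by_mode.setdefault(mode, []).append(share)
    return mean(mean(shares) for shares in by_mode.values())


# Hypothetical "stay" shares: phone polls at 52, internet polls at 48.
balanced = [("phone", 52.0)] * 3 + [("internet", 48.0)] * 3
flurry = [("phone", 52.0)] * 6 + [("internet", 48.0)] * 3  # late phone polls

print(simple_average(balanced), simple_average(flurry))
print(mode_balanced_average(flurry))
```

In this made-up example no poll's result changes, yet the simple average drifts toward "stay" once extra phone polls are added. Averaging within each mode first is one possible guard, and it still assumes neither mode is closer to the truth than the other.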

How much help or hindrance is social media?

Obviously, social media is changing how campaigns reach voters. Donald Trump proved that. I wonder if there won’t be corresponding ways in which polling and data collection also have to rely on social media metrics. That doesn’t mean you can just look at all the fervent Twitter followers and say that’s a level of support. The other way is the amount of additional information we’re going to gather on people from Twitter, from Facebook. But it’s so much information that we’re going to have to work hard to make sense of it.

Data is never ready to use. Nobody ever delivers a nice little disk of data and says, “Here you go.” I have to configure it, think about what it means, what insights I can get from it. Imagine if you see there’s fervent Twitter activity in Wisconsin. Maybe that’s another way to cross-validate your poll. It’s really elaborate detective work.

What should people look for when they read polls?

When you see a prediction that says Hillary Clinton is 95 percent certain to win the election, maybe you need to dig deeper. In my book, I talk about media triggers that should make you skeptical—“four out of five” or “the average American” or “this will make you smarter.” I saw a headline recently, “One Out of Five CEOs Are Psychopaths, a New Study Says.” What it actually was: One out of five professionals in the supply-chain industry in companies with more than $50 million in revenues are psychopaths, based on self-reported data of their own characteristics—and by the way, the sample is only 261 people.

People tell me they’re afraid of math, they don’t understand statistics. But you don’t have to be good at math to read that and pause.
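For readers who do want the math, the uncertainty in a figure like "one out of five" from 261 people can be roughly estimated. The sketch below uses the standard textbook approximation for a 95 percent margin of error on a proportion, which assumes a simple random sample. That assumption is ours, and it is probably generous for a self-reported survey, so the true uncertainty is likely larger. The function name `margin_of_error` is illustrative.

```python
import math


def margin_of_error(proportion, sample_size, z=1.96):
    """Approximate 95% margin of error for a sample proportion,
    assuming a simple random sample (normal approximation)."""
    return z * math.sqrt(proportion * (1.0 - proportion) / sample_size)


# One in five (20%) from the 261-person sample mentioned in the interview.
moe = margin_of_error(0.20, 261)
print(f"20% plus or minus {moe * 100:.1f} points")
```

Under these assumptions the estimate comes with a margin of roughly five percentage points from sampling alone, before accounting for who was surveyed or how the trait was measured.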

Where do you see data affecting people’s lives the most?

Definitely in food. We’re always looking for these kinds of patterns, and we’re very susceptible to things we hear, like eating five avocados a day will make you healthier. I’m an expert, and I’m prone to following this kind of advice. There are 2,000 studies on coffee, and it turns out that it cures and causes cancer, depending on what you read. Health and exercise, particularly for your kids: how you make your kids healthier, happier, smarter. The list of things that make kids smarter is crazy—juggling, an iPhone, glasses, listening to Radiohead.

The other area is information about the best thing to buy: what kind of car, what computer am I going to buy, the best brand of some food. What’s the source of that information, what’s the criterion? People need to train themselves to think deeper.

One thing we saw in this election was a mistrust of facts that didn’t fit people’s experience. If we get the polling right, do you think people will be convinced by it?

Emotion, irrationality: social scientists have struggled with these forever. When I was in high school in Buffalo, the Bills lost four Super Bowls in a row—one to the Redskins. Every year I said, “They lost last year, but this year they’re due.” It’s not true. Last year has no bearing on this year. That was my own bias talking.

There are hot-button issues like this. We find it with autism and the link to vaccines. Our best read of the science is there isn’t a link, but if you’re the mother of an autistic child looking for an explanation and you’ve seen your child vaccinated, it’s going to shape your view. To the extent people can be made aware of their own biases, the data can be informative. That’s the service I can provide. I can’t tell you what to think, but I can give a complete picture of what these numbers are telling us and let you decide.

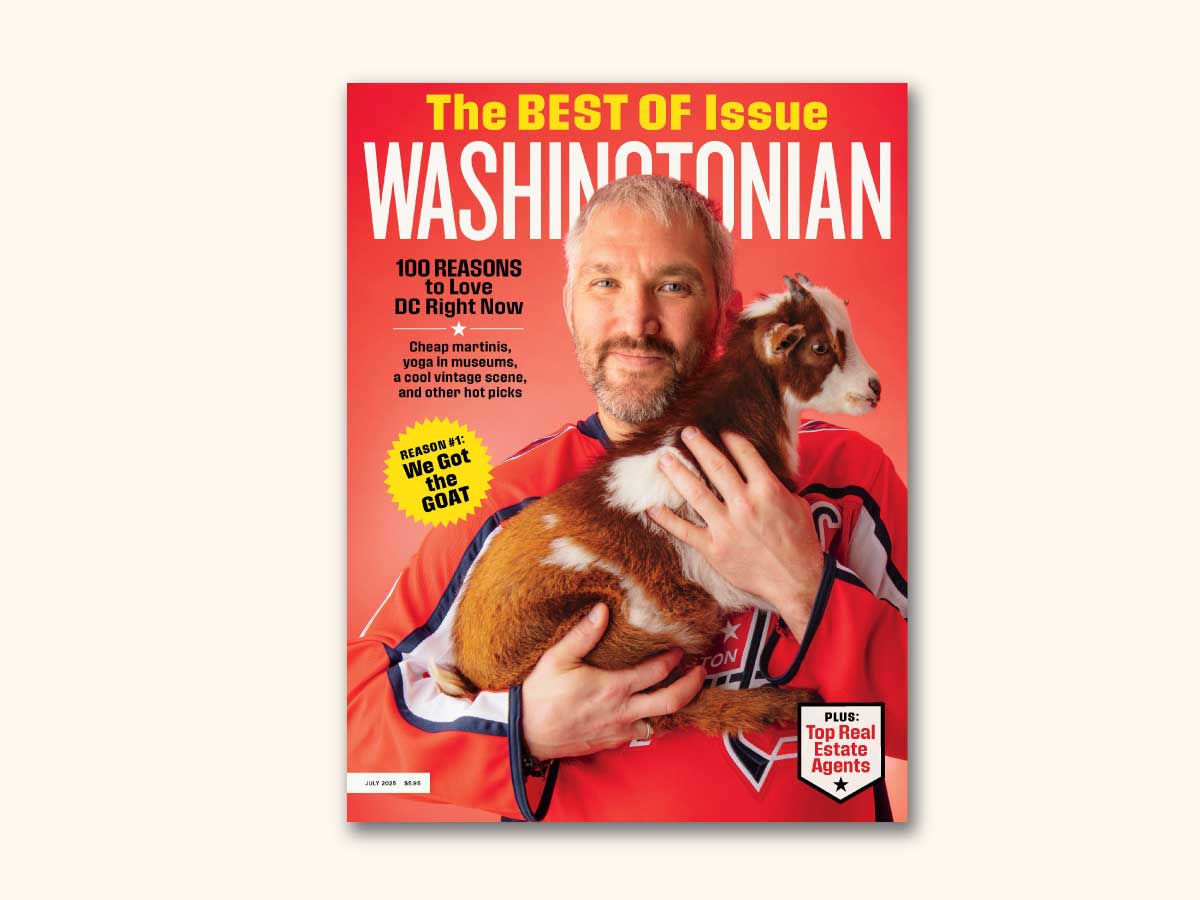

This article originally appeared in the January 2017 issue of Washingtonian.